Last chance to find the missing link in the insurance industry

Your competitors have already found out.

Imagine getting an email from your underwriting team this morning that reads…

🚨 SYSTEM FLAG REQUEST. Reason: Name not matching across systems.

Shocking.

Simply because somewhere in your data pipeline, ‘Robert Smith’ has become ‘Rbert Smth’.

The system flags this as fraud and you lose close to USD 50,000 in premiums.
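For the technically curious, here is a minimal sketch of why this happens and one common mitigation. A strict equality check treats ‘Rbert Smth’ as a completely different person from ‘Robert Smith’. A similarity score can send near-matches to human review instead of raising a fraud flag. The sketch uses Python's standard `difflib.SequenceMatcher`. The function names and the 0.8 threshold are hypothetical choices for illustration. This is not how Underwrite.In or any particular core system actually does it.

```python
from difflib import SequenceMatcher

def normalize_name(name: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is not a mismatch."""
    return " ".join(name.lower().split())

def name_similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize_name(a), normalize_name(b)).ratio()

def classify_name_pair(a: str, b: str, review_threshold: float = 0.8) -> str:
    """Classify names for the same customer coming from two systems.

    review_threshold is a hypothetical cut-off chosen for illustration only.
    """
    if normalize_name(a) == normalize_name(b):
        return "match"
    if name_similarity(a, b) >= review_threshold:
        # Probably a data-entry or extraction error: send to a human, not to fraud.
        return "review"
    return "mismatch"

print(classify_name_pair("Robert Smith", "Rbert Smth"))  # review (ratio is about 0.91)
```

This only catches the symptom downstream. The real fix is keeping ‘Robert Smith’ intact from the source onward.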

Do you think AI can fix this?

AI doesn’t fix bad data. Data fixes data.

AI will only amplify poor data quality. You feed in fragmented information, you get fragmented output.

Data quality will always be the driver. AI is just the co-driver, like in rally racing.

Did you know that the market for AI in underwriting is racing toward USD 41.1 billion by 2033?

Yet underwriting firms worldwide pour millions into algorithms while their data sits broken.

It's like buying a Ferrari and filling it with contaminated fuel.

But…but underwriting firms are saving an estimated USD 6.5 billion annually through automation.

What about that?

That's all good, but 54% of these underwriting firms cite fragmented and unstructured data as the biggest barrier to their AI underwriting transformation.

This barrier between poor data quality and AI adoption is exactly why we introduced Underwrite.In.

Good data quality has to hold from source to final report. Otherwise, what's the point?

Our AI-powered underwriting assistant isn't just another AI tech solution. It's a complete, data-quality-enriching underwriting transformation.

A quick view of how Underwrite.In ensures top-notch data accuracy.

An underwriting system designed specifically for the complexities of modern risk assessment.

Your AI-powered, data-quality-enriching underwriting assistant automates routine tasks, optimizes data management, and delivers insights that empower faster, better-informed decisions in real time.

Go ahead! Try it out for yourself and see the change.

What consequences can you face with poor data?

Manual underwriting has inherited decades of ‘bad data’ habits.

❌Legacy systems.

❌Siloed departments.

❌Unstructured documents.

❌Manual entry errors.

The consequences are severe.

Your underwriters face inaccurate underwriting data.

This leads to additional information requests to your customers, which increases turnaround times. Slow service loses business to competitors.

McKinsey states that underwriters spend 30-40% of their time on operational tasks.

⏳Mostly manual work.

⏳Data cleaning.

⏳Clarification requests.

⏳System reconciliation.

This isn't just inefficiency. It's operational paralysis. Your underwriting team is facing three major challenges.

Data fragmentation across systems

You are storing data in hundreds of source systems organized in discrete silos.

Underwriting has one database. Claims has another. Marketing has a third.

The same information exists in different formats across platforms.

Nothing connects seamlessly.

Your team spends hours gathering and organizing scattered data.

Imagine letting AI agents handle all your data sources. We have a webinar where you'll learn exactly how to scale, even when your data is fragmented.

Book a seat by clicking the link below. Hurry, seats are limited!

Unstructured data mess

54% of insurance executives like you cite extracting and using unstructured data as their top barrier.

❗Emails.

❗PDFs.

❗Handwritten notes.

❗Spreadsheets titled “Final_final_reallyfinal.xlsx”.

❗Images.

Traditional systems can't parse this unstructured mess of information.

AI also needs structured inputs. The gap between the format your data arrives in and the format AI requires creates friction.

Validation and governance gaps

Poor data quality increases the cost of re-running processes that failed because of errors.

Correction efforts multiply. Revenue targets get missed.

Legacy systems lack the safeguards and validation checks found in modern solutions. Inaccuracies persist. They compound over time.

The financial impact? Staggeringly high.

Poor data quality doesn't just slow processes.

It undermines trust.

Only 43% of underwriters trust and regularly accept automated recommendations from predictive analytics tools. Many cite concerns around complexity and data integrity.

When underwriters don't trust the data, they won’t trust AI.

The culprit?

Data quality issues plaguing implementation.

Breaking the cycle of AI distrust and poor data

Breaking this cycle requires confronting a reality.

Your data infrastructure was never designed for AI.

Manual processes scaled through human judgment. AI requires digital precision.

The transformation begins with accepting this gap exists.

Then systematically closing it.

Resistance to AI underwriting isn't about technology fear.

It's about a trust deficit.

Capgemini states that only 8% of underwriters qualify as ‘trailblazers’ who consistently outperform by leveraging AI-driven insights.

What separates them from the rest?

They solved the data quality problem first.

Traditional underwriting relies on human expertise navigating incomplete information.

Underwriters fill gaps through experience. They interpret ambiguous documents. They make judgment calls.

This works until volume exceeds human capacity.

AI-powered underwriting demands a different foundation.

Algorithms don't "interpret" missing data. They either have quality inputs or produce unreliable outputs.

The mindset shift requires accepting three principles:

Data quality precedes AI deployment

Automation enhances your expertise rather than replacing it

Continuous improvement through feedback loops

You can't patch data problems with better AI.

Clean data enables simple AI to outperform sophisticated algorithms on messy inputs.

AI handles data processing. Humans handle judgment.

This requires trusting AI with routine tasks and reserving expertise for complex cases. The value shifts from data gathering to decision-making.

Let’s see why data quality matters in underwriting through an example.

Allianz UK's BRIAN transformation

Allianz UK confronted a familiar challenge.

Underwriters navigated hundred-page documents searching for specific answers.

Decision-making took days, sometimes weeks.

Their solution?

BRIAN. A GenAI underwriter guidance tool built on quality data foundations.

This AI underwriting implementation focused on the ‘data first’ principle.

Document digitization - All property, claims and casualty guides were converted to structured formats

Source verification - BRIAN exclusively uses uploaded and validated documents

Citation transparency - Every answer links back to source material. No random, out-of-the-blue filler data.

BRIAN processed nearly 3,000 questions from 190 users during the pilot phase.

Now it's in full production.

The result?

260 underwriters currently use BRIAN across all UK commercial offices.

Plans to scale to 600+ underwriters within 12 months.

Time savings through instant information retrieval

Enhanced data accuracy by sourcing from verified documents only.

BRIAN's success didn't come from advanced algorithms.

It came from solving the data quality problem. Clean, structured, verified information enabled AI to deliver value.

The same technology on messy data would have failed.

How does good data impact your underwriting process?

AI-powered claims automation reduces processing time by up to 70%.

But only when data flows cleanly through systems.

Underwriters serve more customers with higher efficiency.

Increased speed through automation

A quick view of Underwrite.In's automation at work.

AI-powered underwriting reduces average underwriting decision time from three to five days to 12.4 minutes for standard policies.

Risk assessment achieves 99.3% data accuracy.

Manual data gathering consumed most traditional underwriting time. AI eliminates this bottleneck when data exists in accessible formats.

Enhanced precision through pattern recognition

A quick view of Underwrite.In's dynamic dashboard for maintaining accurate data.

AI-powered underwriting analyzes datasets humans cannot process, improving data accuracy by 54%.

AI-powered underwriting identifies correlations across millions of data points. Risk factors that are invisible in manual review become apparent.

The competitive advantage doesn't come from having AI. It comes from having AI powered by quality data.

For example, your underwriters can use Underwrite.In’s centralized mailbox to gather insurance details.

No more asking, “Where did my Excel sheet go?”

Plus, they can use Underwrite.In’s dynamic dashboard to track where the data is actually stored and make quick, accurate decisions.

How does Underwrite.In drive you toward underwriting success?

Quality data doesn't happen by accident.

It requires intelligent automation.

Continuous validation. Real-time processing.

This is where Underwrite.In fundamentally changes the game.

Traditional AI implementations force you to choose: powerful features or simple implementation. At Underwrite.In, we recognized this creates a false dichotomy.

Our platform solves data quality problems at the source by:

Automating data extraction

Forget manual data entry consuming 30-40% of underwriter time. Our AI-powered system extracts information from emails, PDFs, and documents automatically.

But here's the difference: we don't just extract data. We validate, structure, and normalize it. Every submission becomes decision-ready information, not raw documents requiring interpretation.
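To make “validate, structure, and normalize” concrete, here is a minimal sketch of what that step can look like for a single extracted submission. The field names, accepted date layouts, and error messages are hypothetical and chosen for illustration. This is not Underwrite.In's implementation. Each raw value is cleaned and parsed into a typed field. Every failure is collected instead of silently passing through.

```python
from dataclasses import dataclass
from datetime import date, datetime
from decimal import Decimal, InvalidOperation
from typing import List, Optional, Tuple

@dataclass
class Submission:
    """A normalized, decision-ready record (hypothetical fields, for illustration)."""
    insured_name: str
    date_of_birth: date
    sum_insured: Decimal

# Assumed date layouts seen in emails and PDFs; a real pipeline needs its own list.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d %b %Y")

def parse_date(raw: str) -> date:
    """Try each known layout in order and return a date, or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date {raw!r}")

def parse_amount(raw: str) -> Decimal:
    """Strip currency markers and thousands separators, then require a positive number."""
    cleaned = raw.replace("USD", "").replace("$", "").replace(",", "").strip()
    try:
        value = Decimal(cleaned)
    except InvalidOperation:
        raise ValueError(f"unrecognized amount {raw!r}") from None
    if not value.is_finite() or value <= 0:
        raise ValueError(f"non-positive or non-finite amount {raw!r}")
    return value

def normalize_submission(raw: dict) -> Tuple[Optional[Submission], List[str]]:
    """Validate and normalize raw extracted fields.

    Returns (Submission, []) when every field passes, else (None, errors).
    """
    errors: List[str] = []
    # Collapse stray whitespace and standardize capitalization.
    name = " ".join(str(raw.get("insured_name", "")).split()).title()
    if not name:
        errors.append("insured_name: missing")
    dob = amount = None
    try:
        dob = parse_date(str(raw.get("date_of_birth", "")))
    except ValueError as exc:
        errors.append(f"date_of_birth: {exc}")
    try:
        amount = parse_amount(str(raw.get("sum_insured", "")))
    except ValueError as exc:
        errors.append(f"sum_insured: {exc}")
    if errors:
        return None, errors
    return Submission(name, dob, amount), []
```

Calling it on `{"insured_name": "  robert   SMITH ", "date_of_birth": "05/03/1980", "sum_insured": "USD 250,000"}` yields a `Submission` with `Robert Smith`, 5 March 1980 (the date is read as day/month/year under the assumed format list), and `Decimal("250000")`. Collecting every error instead of stopping at the first one means a single clarification request can cover all the gaps.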

Creating a single source of truth

Data scattered across systems kills AI effectiveness.

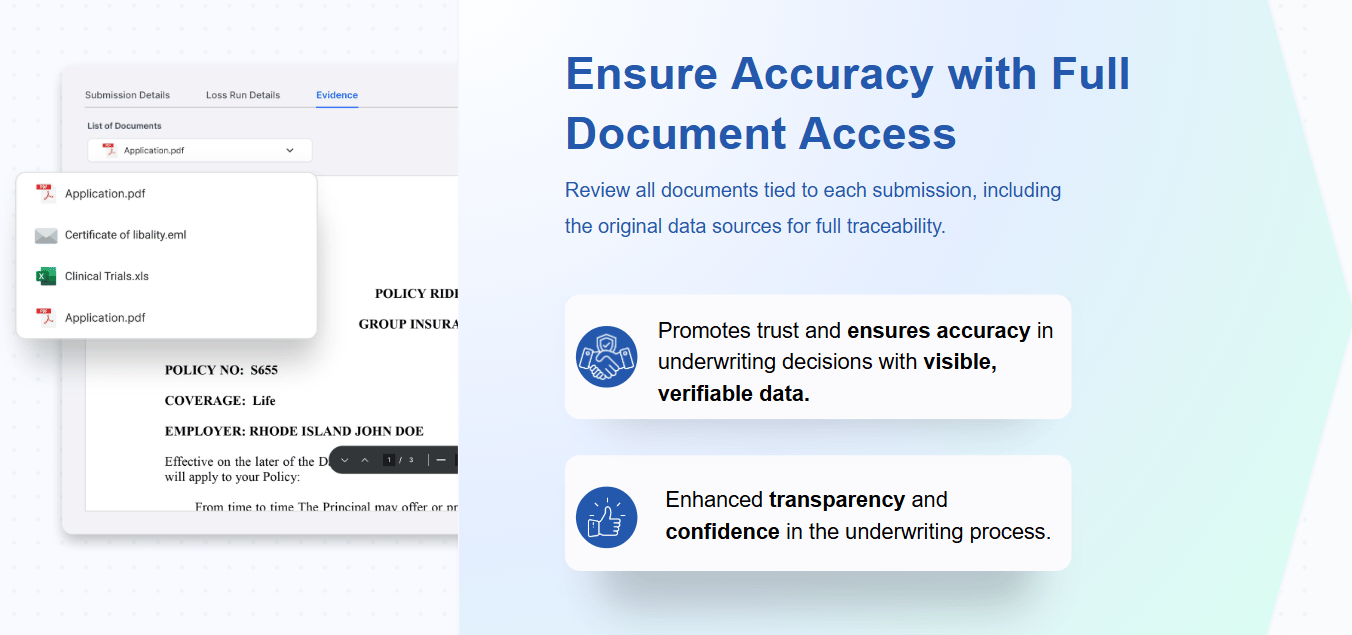

We consolidate everything into one secure repository.

All documents tied to submissions live in one place. You get full traceability and complete transparency.

No more hunting across platforms or reconciling conflicting information.

Delivering real-time quality and validation

Data quality isn't a one-time achievement. It requires continuous monitoring.

Underwrite.In tracks data completeness, accuracy, and consistency in real-time.

Red flags surface immediately.

Gaps get filled before they impact decisions.
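As an illustration of tracking completeness and consistency, here is a minimal sketch. It scores a record against a list of required fields and compares it with the same customer's record from another system. The required-field list, the 80% threshold, and the function names are hypothetical. A production system could run checks like these on every update rather than in a one-off script.

```python
from typing import Dict, List, Optional

# Hypothetical fields a standard submission must carry before a decision.
REQUIRED_FIELDS = ("insured_name", "date_of_birth", "address", "sum_insured", "occupation")

Record = Dict[str, Optional[str]]

def _clean(value: Optional[str]) -> str:
    """Treat None and blank strings alike; compare case- and whitespace-insensitively."""
    return " ".join((value or "").lower().split())

def completeness(record: Record) -> float:
    """Share of required fields that are present and non-blank, from 0.0 to 1.0."""
    filled = sum(1 for field in REQUIRED_FIELDS if _clean(record.get(field)))
    return filled / len(REQUIRED_FIELDS)

def completeness_flags(record: Record, min_score: float = 0.8) -> List[str]:
    """List each missing field, plus an overall flag if the score is below min_score."""
    flags = [f"missing: {field}" for field in REQUIRED_FIELDS if not _clean(record.get(field))]
    score = completeness(record)
    if score < min_score:
        flags.append(f"completeness {score:.0%} is below {min_score:.0%}")
    return flags

def consistency_flags(record: Record, other: Record, source: str) -> List[str]:
    """Flag required fields filled in both records whose cleaned values disagree."""
    flags = []
    for field in REQUIRED_FIELDS:
        mine, theirs = _clean(record.get(field)), _clean(other.get(field))
        if mine and theirs and mine != theirs:
            flags.append(f"{field} differs from {source}: {mine!r} vs {theirs!r}")
    return flags

submission = {"insured_name": "Robert Smith", "date_of_birth": "1980-03-05",
              "sum_insured": "250000", "address": " ", "occupation": None}
claims_record = {"insured_name": "Rbert Smth"}

for flag in completeness_flags(submission) + consistency_flags(submission, claims_record, "claims"):
    print(flag)
```

Here the submission is missing an address and an occupation, so it scores 60% and is flagged. The name disagreement with the claims record surfaces as a red flag before anyone makes a decision on it.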

“Underwriting in AI requires shifting away from legacy models by modernizing core systems and deploying advanced technologies that drive better outcomes and transparency.”

Competitive pressures intensify.

The question isn't whether to prioritize data quality.

It's whether you'll act before it's too late.

Data quality that goes beyond simple automation

Underwrite.In’s intelligent automation integrates seamlessly with your existing workflows and processes. No massive overhauls required. No lengthy implementation periods. No business disruption.

Choose this intelligent automation to:

get a data-first architecture that ensures AI-powered data accuracy remains 100% from day one.

see real-time performance of underwriters, along with deeper insights and recommendations for complex cases.

maintain data quality, along with measurable improvements in processing speed and decision making.

I suggest you schedule a personalized, one-to-one demo with our underwriting experts to see how you can automate your underwriting tasks for a faster turnaround time (TAT).

We'll show you exactly how a real insurance claim submission flows within your system, from email receipt to AI-assisted decision-making. No buzzwords, no complexity, just the complete underwriting transformation that gives your underwriters their time back to become more effective.

The AI revolution in underwriting is real.

The underwriting market will grow from USD 2.85 billion in 2024 to USD 674.1 billion by 2034. But success won't come from algorithms alone.

91% of companies have adopted AI technologies. Yet most struggle to deploy even one use case successfully.

The bottleneck isn't computational power. It's data quality.

Companies, such as yours, solving this foundational challenge will dominate their markets.

Those ignoring it will waste millions on AI implementations that never deliver promised ROI.

The choice is yours. Fix data first or watch competitors pull ahead. Transform or die.

Team Underwrite.In