The hidden risk of AI underwriting no one talks about

But we will...

Microscope on the following

When & why AI underwriting decisions become risky

How to build ethical, explainable AI your team can trust

How explainability protects your company from regulators

In this last edition of 2025, we want to talk about the parts of AI underwriting most solution builders quietly skip over.

Not model accuracy. Not speed. Not automation demos.

But the uncomfortable stuff: accountability, explainability, and what happens after AI starts influencing real underwriting decisions.

AI-driven underwriting doesn’t become risky because the technology is broken.

It becomes risky when your company can’t clearly explain why a decision was made, how risk was evaluated, or who ultimately owned the call.

And in insurance, no underwriting decision exists in isolation.

Every approval or decline has a long tail. It shapes broker trust, regulatory scrutiny, portfolio health, and eventually, your capital position. These decisions echo long after the policy is bound.

So when AI starts influencing those calls without enough transparency, the risk doesn’t go away. It simply shifts into places your dashboards don’t always show.

That’s the part worth paying attention to.

The first danger point is high-stakes ambiguity

AI performs best on clean, well-labeled, repeatable risks.

But most underwriting decisions your team worries about aren’t clean. They sit in the grey zone: new industries, changing geographies, evolving policy wordings, and borderline submissions.

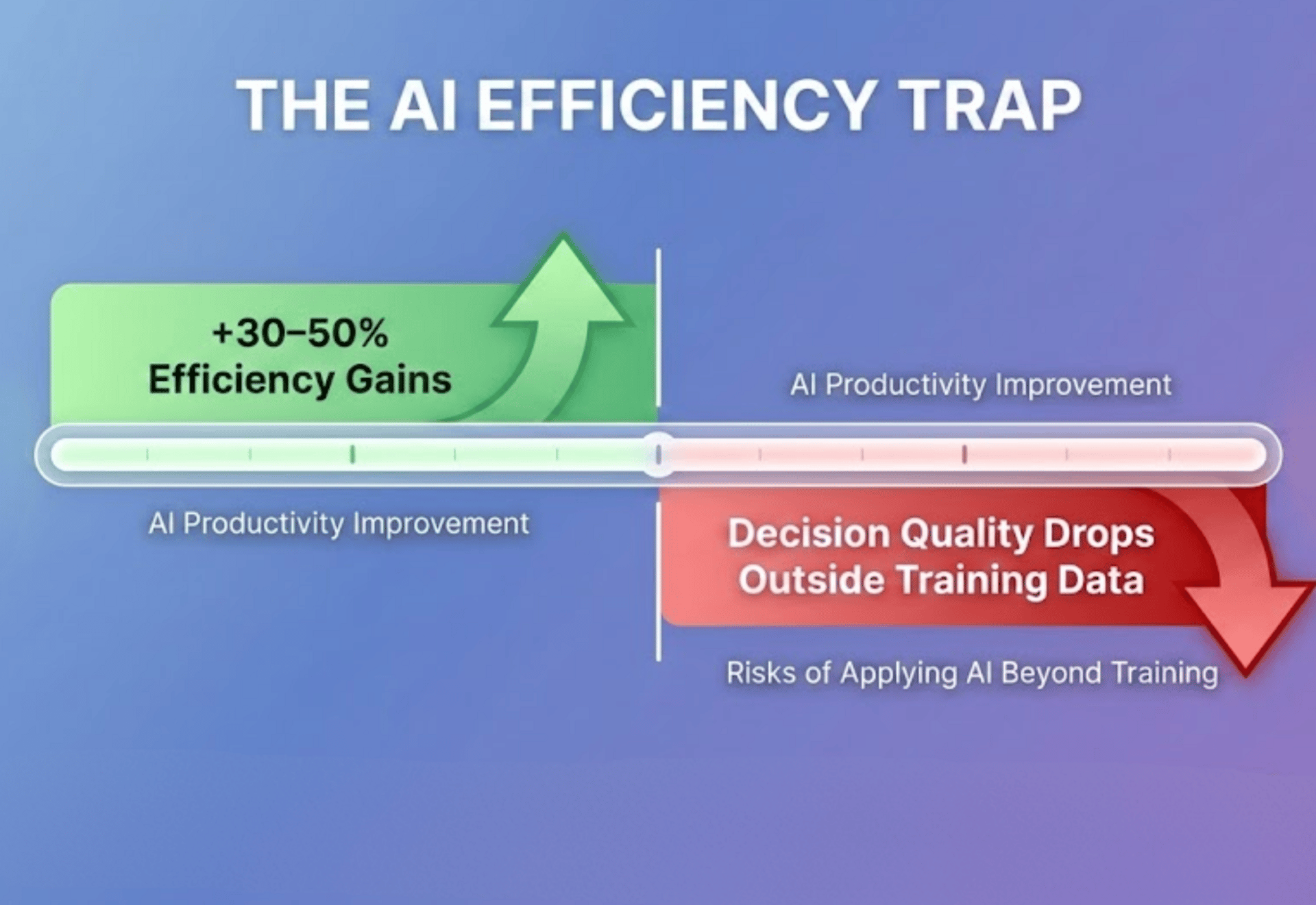

Research shows that while AI can improve efficiency by 30–50%, decision quality drops sharply when models are applied beyond the scenarios they were trained on. In underwriting, that’s where losses are born.
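One way to act on this is to flag submissions that fall outside what the model was trained on and send them to an underwriter. The sketch below is only an illustration: the feature names, the training ranges, and the `out_of_range_features` and `route` helpers are all hypothetical, and a simple min/max check stands in for whatever drift detection your team actually uses.

```python
from typing import Dict, List, Tuple

# Hypothetical ranges observed in the model's training data (assumed values).
TRAINING_RANGES: Dict[str, Tuple[float, float]] = {
    "annual_revenue_musd": (0.5, 250.0),
    "years_in_business": (2.0, 80.0),
    "prior_claims_5y": (0.0, 6.0),
}


def out_of_range_features(submission: Dict[str, float]) -> List[str]:
    """Return the features that are missing or outside the training range."""
    flagged = []
    for name, (low, high) in TRAINING_RANGES.items():
        value = submission.get(name)
        if value is None or not (low <= value <= high):
            flagged.append(name)
    return flagged


def route(submission: Dict[str, float]) -> str:
    """Route any submission with a flagged feature to a human underwriter."""
    return "human_review" if out_of_range_features(submission) else "model_assisted"


# A young company: half a year in business is below the assumed training range.
startup = {"annual_revenue_musd": 1.2, "years_in_business": 0.5, "prior_claims_5y": 0.0}
print(out_of_range_features(startup))  # ['years_in_business']
print(route(startup))                  # human_review
```

The point of the design is that the model never silently scores a risk it has no basis for. The flagged feature names also give the underwriter a starting point for the review.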

The second risk emerges around accountability gaps

If an AI-assisted decision leads to adverse outcomes such as mispricing, unfair rejection, or regulatory questions, “the model recommended it” isn’t a defensible answer.

Regulators increasingly expect insurers to explain “why” a risk was accepted or declined, not just how fast it was processed.

According to Deloitte, over 60% of insurance executives now cite explainability as a top concern in AI adoption, ranking it above raw accuracy.

The third risk is false confidence

AI outputs often look clean and precise, which can make them feel more reliable than they really are.

Over time, teams may stop questioning recommendations, not because they agree with them, but because they trust the system. In complex underwriting decisions, that’s where costly mistakes tend to creep in.

How to build ethical, explainable AI your team can trust

Designing ethical and explainable AI workflows in underwriting sounds straightforward in theory.

In practice, it’s one of the hardest things for insurance leaders to get right.

Most companies don’t fail at AI because the models are weak. They fail because the surrounding workflow is fragmented, opaque, and impossible to defend once a decision is questioned.

Founders often assume the answer is to build internally: stitch together data pipelines, integrate third-party feeds, layer explainability tools on top, and hope it all holds together under regulatory or broker scrutiny.

In reality, that approach introduces more risk than it removes.

You end up with a complex system that your team didn’t design for underwriting first, and one that’s difficult to explain consistently across decisions.

Ethical, explainable AI in underwriting starts with a simpler principle: your team must be able to see, trace, and explain every signal that influences a decision. Not just the outcome.

That’s why using a ready-made underwriting platform purpose-built for explainability is often the safer and smarter route.

Underwrite.In is designed around this exact requirement. Instead of treating explainability as an add-on, it builds it into the workflow from the ground up through multi-source data integration.

For your team, that means risk decisions are never based on a single input or isolated dataset.

Underwrite.In automatically consolidates information from internal systems, third-party data sources, real-time inputs, and historical records into a single, coherent risk profile.

This matters more than most leaders realize. Industry studies show that underwriting decisions made on incomplete or siloed data are significantly more likely to be challenged, overridden, or reversed later in the policy lifecycle.

In fact, insurers operating across fragmented data environments report up to 30% higher rework and escalation rates.

With Underwrite.In, your underwriting team can trace exactly where each data point comes from, how recent it is, and how it compares to historical behavior.
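To make the idea of traceable data points concrete, here is a minimal sketch of what carrying source and recency alongside each value could look like. It is not Underwrite.In’s actual data model or API: the `DataPoint` class, the `stale_points` helper, the source names, and the 180-day threshold are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List


@dataclass(frozen=True)
class DataPoint:
    name: str
    value: float
    source: str            # hypothetical source label, e.g. "internal_claims_db"
    observed_at: datetime  # when the source recorded the value


def stale_points(points: List[DataPoint], now: datetime, max_age: timedelta) -> List[DataPoint]:
    """Return the data points older than max_age so a reviewer can see them."""
    return [p for p in points if now - p.observed_at > max_age]


now = datetime(2025, 12, 15, tzinfo=timezone.utc)
profile = [
    DataPoint("prior_claims_5y", 2.0, "internal_claims_db",
              datetime(2025, 12, 1, tzinfo=timezone.utc)),
    DataPoint("credit_score", 710.0, "third_party_feed",
              datetime(2024, 6, 1, tzinfo=timezone.utc)),
]

# Assumed freshness rule: anything older than 180 days is surfaced for review.
for p in stale_points(profile, now, max_age=timedelta(days=180)):
    print(f"{p.name} from {p.source} is stale (observed {p.observed_at.date()})")
```

Because every value keeps its source and timestamp, the question “where did this number come from, and how old is it?” can be answered from the record itself rather than from memory.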

Real-time updates ensure decisions reflect current conditions, while historical analysis adds context without anchoring your team to outdated norms.

This is what ethical AI looks like in practice.

When a broker questions a decision, your team doesn’t fall back on “the system flagged it.” They can point to the specific data sources, trends, and changes that informed the outcome.

When regulators ask how a risk was evaluated, there’s a clear, auditable trail. When leadership reviews portfolio shifts, the reasoning is transparent, not buried inside a model.
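An auditable trail can be as simple as a record that stores the recommendation, the reasons shown to the underwriter, the final decision, and the person who owned it. The sketch below assumes a hypothetical `DecisionRecord` structure with invented field names and example values. It illustrates the principle and does not describe any specific platform.

```python
import json
from dataclasses import dataclass, asdict
from typing import List


@dataclass
class DecisionRecord:
    submission_id: str
    model_recommendation: str  # what the model suggested
    reasons: List[str]         # the signals shown to the underwriter
    final_decision: str        # what was actually decided
    decided_by: str            # the named human who owned the call

    def __post_init__(self) -> None:
        # Refuse to create a record that nobody owns or that has no reasoning.
        if not self.decided_by.strip():
            raise ValueError("every decision needs a named human owner")
        if not self.reasons:
            raise ValueError("every decision needs at least one recorded reason")


record = DecisionRecord(
    submission_id="SUB-0001",
    model_recommendation="decline",
    reasons=["prior_claims_5y above portfolio norm", "new industry segment"],
    final_decision="refer",
    decided_by="j.doe",
)

# Serialise the record so it can be stored and handed over during a review.
print(json.dumps(asdict(record), indent=2))
```

Note that the model’s recommendation and the final decision are stored separately. When they differ, as in this example, the record shows that a human exercised judgment rather than rubber-stamping the output.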

Research also shows that explainable AI workflows lead to 20–25% higher adoption and confidence levels among underwriting teams, compared to rule-only systems.

Here’s another reason reliable AI matters:

Explainability isn’t just an internal best practice. It’s one of the strongest defenses your company has when regulators start asking questions.

And those questions are becoming more common.

Over the past few years, regulators across major insurance markets have made it clear that automated and AI-assisted underwriting decisions must be transparent, auditable, and fair.

In the U.S., state insurance departments now routinely ask insurers to demonstrate how underwriting models avoid unfair discrimination.

In the EU, GDPR’s rules on automated decision-making, often described as a “right to explanation,” give policyholders the ability to question automated decisions.

Similar expectations are emerging in the UK, Singapore, and Australia.

According to a Deloitte survey, over 70% of insurance executives say regulatory scrutiny around AI and automated decision-making has increased significantly in the last two years.

This is why DataManagement.AI focuses heavily on Policyholder Risk Profiling as a foundational layer for ethical underwriting.

Instead of treating risk as a single output number, your team works with a structured breakdown of what actually drove the classification.

Basic demographics like age, region, and marital status are combined with employment details such as occupation and income.

Lifestyle indicators like smoking, alcohol use, and wellness scores are incorporated alongside policy metadata like tenure and coverage type. Recent medical or claims-related signals are pulled in context, not isolation.

The result isn’t just a risk label. It’s a composite risk profile with an attribute-level explanation.
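As a rough illustration of an attribute-level explanation, the sketch below scores a policyholder with a simple additive model and returns each attribute’s contribution alongside the total. The attribute names, the point weights, and the `risk_profile` function are hypothetical. A real profile would use your own rating factors, and this is not a description of how any particular product computes risk.

```python
from typing import Dict, List, Tuple

# Hypothetical per-attribute point weights (assumed, not a real rating plan).
WEIGHTS: Dict[str, float] = {
    "smoker": 25.0,
    "age_over_60": 15.0,
    "recent_claim": 20.0,
    "wellness_score_high": -10.0,
}


def risk_profile(attributes: Dict[str, bool]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the total score plus each attribute's contribution, largest first."""
    contributions = [
        (name, WEIGHTS[name])
        for name, present in attributes.items()
        if present and name in WEIGHTS
    ]
    # Order by size of effect so the main drivers appear at the top.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    total = sum(points for _, points in contributions)
    return total, contributions


score, drivers = risk_profile(
    {"smoker": True, "age_over_60": False, "recent_claim": True, "wellness_score_high": True}
)
print(score)    # 35.0
print(drivers)  # [('smoker', 25.0), ('recent_claim', 20.0), ('wellness_score_high', -10.0)]
```

The total alone would be the “risk label”. The list of drivers is what lets an underwriter say which attributes raised the score, which lowered it, and by how much.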

What are other practical benefits of ethical and explainable AI?

Audits become reviews instead of investigations

Market expansion conversations move faster

Product approvals face fewer delays

There’s also a financial upside.

According to PwC, insurers that invest early in explainable AI frameworks reduce regulatory remediation costs by up to 30%, largely by avoiding rework, model withdrawals, and prolonged review cycles.

If there’s one takeaway from all of this, it’s simple:

AI in underwriting isn’t risky because it’s powerful.

It’s risky when it’s unaccountable.

That’s why the future of underwriting AI isn’t fully autonomous models making silent calls in the background.

It’s decision-support systems that surface context, show their reasoning, and keep humans accountable at the point where judgment actually matters.

Founders who get this right don’t treat explainability as a compliance checkbox. They treat it as a strategic advantage.

This shift is already visible across the industry.

The Gen Matrix Q4 Report highlights a clear pattern among AI leaders in insurance. It features leaders who invested early in explainable workflows, human-in-the-loop systems, and structured risk intelligence.

As a result, they’re scaling faster without triggering loss creep, regulatory friction, or erosion of underwriting judgment.

And as underwriting continues to evolve, the companies that truly win won’t be the ones chasing the most complex models. They’ll be the ones whose teams can confidently explain why a decision was made, and stand behind it.

If you want your underwriting team to start seeing real ROI from AI as you head into 2026, it starts with clarity, not complexity.

Book a free demo and see how Underwrite.In helps your team.

Team Underwrite.In